Why AI Face Swaps Can Look Blurry or Soft

Key findings

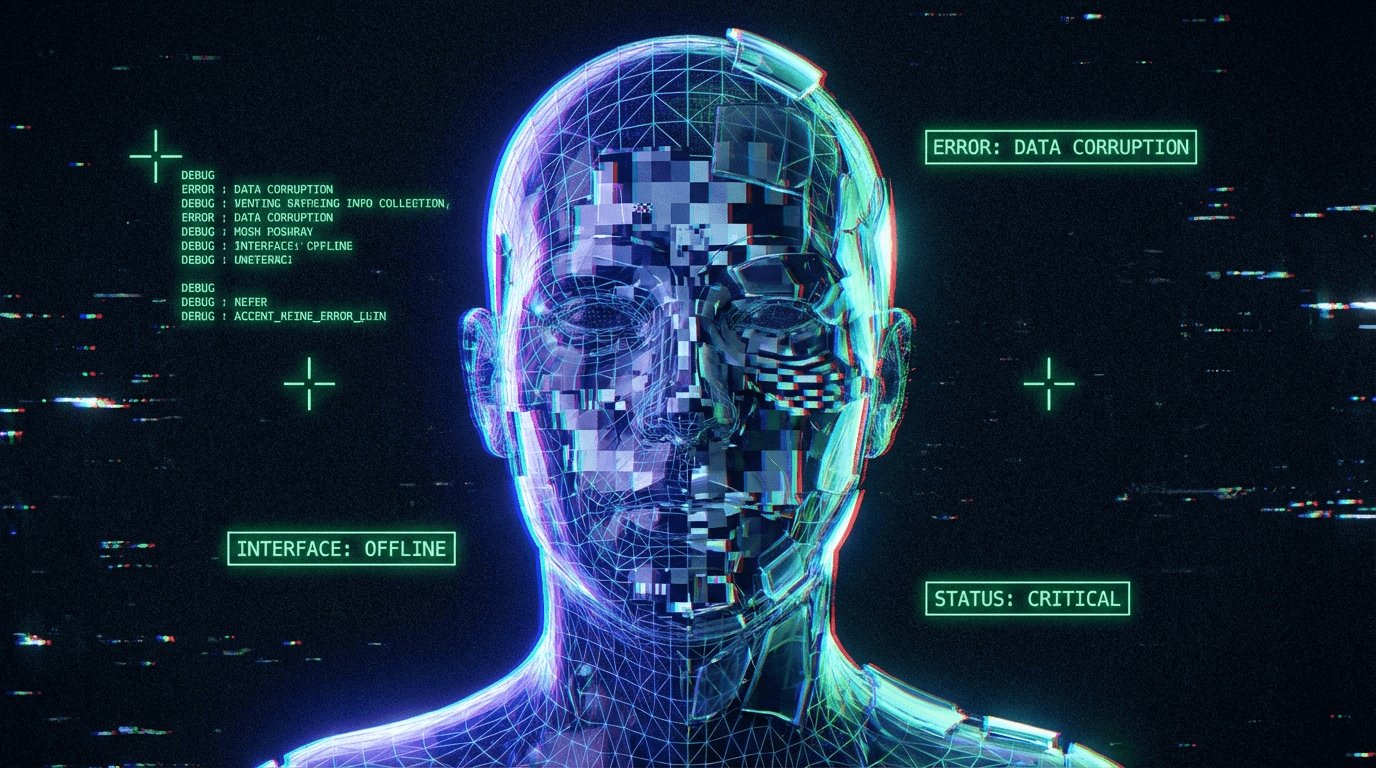

The "blurriness" or "softness" in AI face swaps comes largely from the resolution bottleneck of the underlying model. The most widely used one-shot model, inswapper_128, operates at a fixed resolution of 128x128 pixels. No matter how sharp your 4K source video is, the software shrinks the face crop to a 128px square, performs the swap, and then stretches the result back to its original size. To hide the resulting blur, tools apply Face Enhancers (such as GFPGAN or CodeFormer), which synthesize sharp detail but often wipe out real skin texture, producing a "waxy" or "plastic" look.

Applicable Scope

This explanation applies to FaceFusion, Rope, Roop, and any other tool built on the inswapper_128.onnx model. It explains the trade-off between "pixelated realness" and "sharp plastic."

What the phenomenon looks like

- "The face looks like a low-res JPEG pasted onto a 4K movie."

- "The skin looks too smooth, like a beauty filter was applied."

- "Eyelashes and stubble are gone, replaced by a blurry smear."

- "Why does the face look 'plastic' or 'fake' compared to the rest of the body?"

- "Can I get a 1080p face swap without upscaling?"

These are typical of the complaints and questions that describe this problem.

Why this happens

It is a problem of Information Loss followed by Artificial Hallucination.

1. The 128-Pixel Funnel

Imagine taking a high-quality photo of a face, shrinking it to the size of a postage stamp (128x128), and then trying to blow it back up to poster size.

- The Process: The software detects the face, crops it, downscales the crop to 128x128, feeds it to the model, gets a 128x128 swapped face back, resizes that to the original face size, and pastes it into the frame.

- The Result: If the face in your video is large (e.g., a close-up), that 128px patch is stretched to fill a 1000px area, roughly an 8x enlargement. The result is a blurry, blocky mess.
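
The funnel can be illustrated with a toy round trip on a single row of pixels. This is only a sketch under stated assumptions: the helper names `box_downscale` and `nearest_upscale` are made up for illustration, and real tools use image-library resamplers (bilinear, bicubic, and so on) rather than these simple ones. The point it shows is general, though: detail finer than the 128-sample grid does not survive the trip.

```python
def box_downscale(row, factor):
    """Shrink a 1-D row of pixel values by averaging each group of `factor` samples."""
    return [sum(row[i:i + factor]) / factor for i in range(0, len(row), factor)]


def nearest_upscale(row, factor):
    """Stretch a row back up by repeating each sample `factor` times."""
    return [value for value in row for _ in range(factor)]


# Hypothetical close-up: one 1024-pixel row of very fine detail (think stubble),
# alternating dark and light on every pixel.
original = [0 if i % 2 == 0 else 255 for i in range(1024)]

small = box_downscale(original, 8)     # 1024 -> 128 samples
restored = nearest_upscale(small, 8)   # 128 -> 1024 samples

print(len(small))                      # 128
print(max(original) - min(original))   # 255: full contrast in the source
print(max(restored) - min(restored))   # 0.0: every sample is now the same gray
```

Every group of eight source pixels averages to the same mid-gray, so the restored row is completely flat. No amount of stretching can bring the stripes back, which is why an enhancer has to invent detail rather than recover it.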

2. The Enhancer "Band-Aid"

To fix the blur, tools use Face Enhancers (GFPGAN, CodeFormer, GPEN).

- How they work: They don't "recover" the original pixels. They hallucinate new ones. They guess what an eye or a patch of skin should look like based on patterns learned from large sets of generic high-quality face images.

- The Side Effect: Because they are guessing, they tend to generate "average" features. They smooth out unique wrinkles, pores, and scars, and replace distinct eyes with generic "perfect" eyes. This creates the "Uncanny Valley" plastic look.

3. Lighting Mismatch

The 128px model often fails to capture subtle lighting gradients. When upscaled, these flat lighting areas look like smooth makeup foundation, further killing the realism.

Trade-offs implied

- Enhancer Strength:

  - 0% (Off): The face is pixelated and blurry, but the lighting and color are physically correct.

  - 100% (On): The face is sharp and high-res, but looks like a video game character (plastic skin, floating eyes).

  - The "Sweet Spot": Usually blending the enhancer at 20-50% (if the software supports it) to get some sharpness without total texture loss.

- Processing Speed:

  - Running the swap alone is fast.

  - Running the Enhancer adds significant time (often doubling the render time) because it's a separate, heavy AI process.
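
The enhancer strength setting can be pictured as a linear mix between the raw swap and the enhanced result. The sketch below assumes exactly that; `blend_enhancer` is a hypothetical helper, not the API of any particular tool, and the pixel values are made up.

```python
def blend_enhancer(swapped, enhanced, strength):
    """Linearly mix two same-sized pixel lists; `strength` runs from 0.0 to 1.0."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    if len(swapped) != len(enhanced):
        raise ValueError("both inputs must have the same number of pixels")
    return [(1.0 - strength) * s + strength * e for s, e in zip(swapped, enhanced)]


swapped_row = [100, 120, 140]   # made-up values: soft output of the raw swap
enhanced_row = [60, 130, 220]   # made-up values: sharper output of the enhancer

print(blend_enhancer(swapped_row, enhanced_row, 0.0))  # [100.0, 120.0, 140.0]
print(blend_enhancer(swapped_row, enhanced_row, 0.5))  # [80.0, 125.0, 180.0]
print(blend_enhancer(swapped_row, enhanced_row, 1.0))  # [60.0, 130.0, 220.0]
```

At 0.0 the enhancer has no effect, at 1.0 it fully replaces the swap, and values in between keep part of the original texture while borrowing some of the enhancer's contrast.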

Frequently asked questions

Q: Is there a 1024px or 4K model?

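A: Not commonly available for one-shot swappers yet. Training a model at 512px or 1024px is far more expensive: a 512px face has 16 times as many pixels as a 128px one, and a 1024px face has 64 times as many, so the computing power, VRAM, and dataset size needed all grow steeply. Some newer models (like simswap_512) exist but are harder to run and less stable.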
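
To put rough numbers on the cost of larger models, the sketch below counts pixels per face crop at several resolutions. It only counts pixels; actual VRAM use and training cost depend on the model architecture, which is not modeled here, and `pixel_ratio` is an illustrative helper.

```python
BASE_SIDE = 128  # side length of the inswapper_128 face crop


def pixel_ratio(side, base=BASE_SIDE):
    """How many times more pixels a side x side crop has than a base x base crop."""
    return (side * side) / (base * base)


for side in (128, 256, 512, 1024):
    print(f"{side}x{side}: {side * side} pixels, {pixel_ratio(side):.0f}x the 128px crop")
```

Pixel count grows with the square of the side length, so each doubling of resolution quadruples the amount of data the model has to process per face.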

Q: Which Enhancer is best?

A: Each has a different strength:

- GPEN: Generally better at preserving likeness and facial structure.

- CodeFormer: Very strong at fixing "broken" faces, but very aggressive; at high strength it tends to make everyone look like a doll.

- GFPGAN: A middle ground, good for general restoration.

Q: Can I just sharpen the video later?

A: Yes. Using a general video upscaler (like Topaz Video AI) is often better than using a face-specific enhancer, because it tends to preserve film grain and texture better than GFPGAN does.

Q: Why does the face look better in the preview?

A: The preview window is usually small. On a small screen, 128px looks fine. You only see the blur when you view the full-resolution export on a large monitor.

Related phenomena

- Why Face Swap Often Looks Unnatural – The broader "uncanny" effect caused by smooth skin.

- Face Alignment Errors Explained – Distortions that look like blur but are actually geometry failures.

Final perspective

The "Blurry vs. Plastic" dilemma is the defining characteristic of the current generation of one-shot swappers. Until 512px+ models become the standard, the best results come from avoiding extreme close-ups. If the face takes up less than 15% of the screen height, the 128px limit is barely noticeable: on a 1080p frame that is roughly 160px, close to the model's native 128px.
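
As a rough planning aid, the stretch applied to the model output can be estimated from the frame height and the share of it the face occupies. This is a sketch under a simplifying assumption: the face crop is treated as a square whose side equals the face height, and `stretch_factor` is a made-up helper rather than part of any tool.

```python
MODEL_SIDE = 128  # native output size of inswapper_128


def stretch_factor(frame_height, face_fraction, model_side=MODEL_SIDE):
    """Estimate how many times the 128px output must be enlarged to cover the face."""
    face_px = frame_height * face_fraction
    return face_px / model_side


for name, height in (("720p", 720), ("1080p", 1080), ("4K", 2160)):
    for fraction in (0.15, 0.50):
        factor = stretch_factor(height, fraction)
        print(f"{name}, face at {fraction:.0%} of height: about {factor:.1f}x enlargement")
```

At 15% of frame height the factor is about 1.3 on a 1080p frame and about 2.5 at 4K, while a face filling half of a 4K frame needs more than 8x. Factors near 1 mean the 128px output is shown close to its native size, which is when the softness is hardest to spot.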