Why Face Swap Often Looks Unnatural

Key findings

An "unnatural" or "fake" look in AI face swaps is rarely caused by a single setting. It usually results from a resolution mismatch combined with lighting inconsistency, and a visible blend boundary makes both easier to spot. One-shot swappers (like FaceFusion using inswapper_128) generate a 128x128 pixel face with very smooth skin and little fine texture. When this low-res, smooth patch is upscaled and pasted onto a high-res, grainy target video, the texture difference creates a "plastic mask" effect. The AI also often fails to match the color temperature and shadow direction of the original scene, so the face looks like a sticker.

Applicable Scope

This explanation applies to one-shot swappers (inswapper, simswap) and face enhancers (GFPGAN, CodeFormer). It explains why results often look "uncanny" even when detection succeeds.

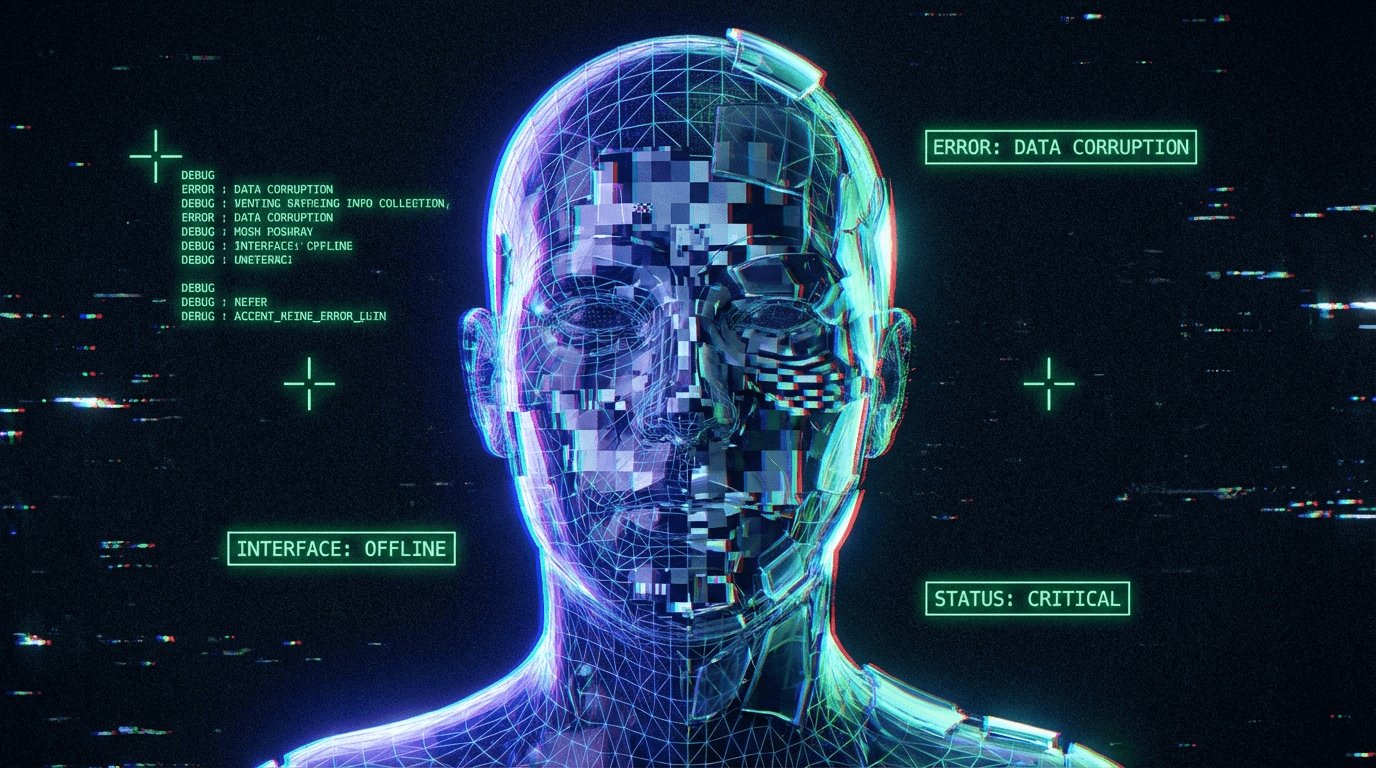

What the phenomenon looks like

- "The face looks like a smooth plastic mask pasted on a real body."

- "The skin texture is too perfect compared to the rest of the movie."

- "There is a visible blurry line around the jaw and forehead."

- "The lighting on the face feels flat, while the room is shadowy."

- "Why does the face look 'pasted on'?"

When this problem appears most often

The "uncanny valley" effect is strongest in these scenarios:

- High-Quality Source Footage: Swapping into a 4K movie emphasizes the low resolution of the AI face (128x128 or 512x512). The contrast between sharp background grain and smooth AI skin is jarring.

- Complex Lighting: Scenes with dappled light (under trees), harsh noir shadows, or colored club lighting break the AI's simple color-matching logic. The face often ends up looking too bright or gray.

- Using "Face Enhancer" at 100%: Tools like GFPGAN reconstruct faces by hallucinating "ideal" skin. This removes all natural imperfections (scars, pores, wrinkles), resulting in a "beauty filter" look that clashes with gritty reality.

- Extreme Head Rotation: As the face turns, the "mask" (the cutout area) might not cover the original jawline perfectly, leaving a double chin or a blurry edge where the real skin meets the AI skin.

Why this happens

There are three technical culprits that conspire to make a swap look fake.

1. The Resolution Gap (Texture Mismatch)

The standard model (inswapper_128) generates a face patch that is only 128 pixels wide.

- The Issue: To fit a 4K video, this tiny patch must be upscaled. Even with enhancers, the type of detail generated is different. The AI generates "digital noise" or "smooth gradients," while a camera captures "film grain" and "sensor noise." The eye spots this texture mismatch instantly.
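
The texture gap can be made concrete with a small NumPy sketch. It is an illustration only: the arrays are synthetic stand-ins (random noise for camera grain, a smooth gradient for the generated face), the 600 px face width is an arbitrary example value, and `neighbour_energy` is a hypothetical helper, not a metric used by any swapper.

```python
import numpy as np

def upscale_factor(face_width_px: int, patch_size: int = 128) -> float:
    """How far a square swap patch must be stretched to cover the face."""
    return face_width_px / patch_size

def neighbour_energy(img: np.ndarray) -> float:
    """Mean squared difference between horizontal neighbours (crude texture measure)."""
    return float(np.mean(np.diff(img.astype(np.float64), axis=1) ** 2))

rng = np.random.default_rng(0)

# Stand-in for camera footage: mid-gray plus sensor-like noise.
grainy_background = 128.0 + rng.normal(0.0, 8.0, size=(512, 512))

# Stand-in for a generated face: a smooth 128x128 gradient, upscaled 4x
# by pixel repetition (assumption: real tools use better interpolation).
small_patch = np.tile(np.linspace(100.0, 160.0, 128), (128, 1))
upscaled_patch = np.repeat(np.repeat(small_patch, 4, axis=0), 4, axis=1)

print(upscale_factor(600))                  # 4.6875
print(neighbour_energy(grainy_background))  # large: pixel-level grain
print(neighbour_energy(upscaled_patch))     # tiny: almost no fine texture
```

The two energy values differ by orders of magnitude, which is a rough numeric version of what the eye reads as "plastic" skin next to real footage.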

2. The "Flat Lighting" Assumption

The embedding model extracts identity but loses some environmental context.

- The Issue: The AI tries to relight the new face to match the target, but it creates a "generalized" average light. It struggles to replicate complex shadows (e.g., a shadow of a window blind across the face). The result is a face that looks "self-luminous" or floating.
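
To see why an "average" light cannot reproduce a hard shadow, consider a simplified global color match. This is a swapped-in illustration, not the swapper's actual relighting: it matches per-channel mean and standard deviation in RGB (a Reinhard-style idea, simplified), and `match_mean_std` is a hypothetical helper name.

```python
import numpy as np

def match_mean_std(face: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Shift and scale each channel of `face` to the target's mean and std."""
    face = face.astype(np.float64)
    target = target.astype(np.float64)
    f_mean, f_std = face.mean(axis=(0, 1)), face.std(axis=(0, 1))
    t_mean, t_std = target.mean(axis=(0, 1)), target.std(axis=(0, 1))
    out = (face - f_mean) / (f_std + 1e-6) * t_std + t_mean
    return np.clip(out, 0.0, 255.0)

rng = np.random.default_rng(0)

# Target face region with a hard shadow: left half dark, right half bright.
target = np.full((64, 64, 3), 180.0)
target[:, :32] = 60.0

# Generated face: evenly lit, with mild pixel noise.
face = 150.0 + rng.normal(0.0, 10.0, size=(64, 64, 3))

matched = match_mean_std(face, target)

# Brightness difference between the right and left halves.
target_gap = target[:, 32:].mean() - target[:, :32].mean()
matched_gap = matched[:, 32:].mean() - matched[:, :32].mean()
print(target_gap)   # 120.0
print(matched_gap)  # close to 0: the shadow's position is not transferred
```

The overall brightness ends up close to the target's, yet the left/right shadow is absent, because global statistics carry no information about where the light falls.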

3. The Mask Boundary (The "Sticker" Effect)

The system must blend the rectangular AI face patch into the target head.

- The Issue: It uses a "soft mask" (feathering) to hide the edges. If the feathering is too narrow, you see a hard line. If it's too wide, you see a "halo" or ghosting around the face. Neither looks perfectly organic without manual compositing.
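
The feathering trade-off can be sketched with a plain alpha blend. This is a minimal illustration under stated assumptions: a square mask with a linear ramp, flat gray test images, and hypothetical helper names (`feathered_mask`, `blend`, `largest_step`). Real tools typically blur a face-shaped mask instead.

```python
import numpy as np

def feathered_mask(size: int, feather: int) -> np.ndarray:
    """Square alpha mask: 1.0 in the centre, ramping linearly to 0.0
    over `feather` pixels at each edge."""
    idx = np.arange(size)
    dist = np.minimum(idx, size - 1 - idx)  # distance to the nearest edge
    ramp = np.clip(dist / max(feather, 1), 0.0, 1.0)
    return np.minimum.outer(ramp, ramp)

def blend(patch: np.ndarray, background: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Alpha-blend an H x W x 3 patch over a background using an H x W mask."""
    alpha = mask[..., None]
    return alpha * patch + (1.0 - alpha) * background

size = 64
patch = np.full((size, size, 3), 200.0)       # stand-in for the AI face
background = np.full((size, size, 3), 100.0)  # stand-in for the target skin

def largest_step(feather: int) -> float:
    """Biggest jump between adjacent pixels along the centre row."""
    out = blend(patch, background, feathered_mask(size, feather))
    return float(np.max(np.abs(np.diff(out[size // 2, :, 0]))))

for feather in (2, 24):
    fully_swapped = float(np.mean(feathered_mask(size, feather) == 1.0))
    print(feather, largest_step(feather), fully_swapped)
```

With a feather of 2 the largest pixel-to-pixel jump is 50 gray levels (a visible seam) while about 88% of the patch is fully swapped. With a feather of 24 the jump drops to about 4 levels, but only about 6% of the patch is fully swapped and the rest is a semi-transparent mix, which is where ghosting comes from.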

Trade-offs implied

- Sharpness vs. Realism (The Enhancer Dilemma):

  - Option A: Turn off Face Enhancer. The face matches the lighting better but looks blurry (low res).

  - Option B: Turn on Face Enhancer. The face looks sharp and high-res, but the skin looks "plastic" and the lighting becomes flat.

- Mask Blur Radius:

  - Low Blur: Keeps the face shape defined but risks visible seams (hard edges).

  - High Blur: Hides seams effectively but can make the jawline or hairline look "smudged" or ghostly.

Frequently asked questions

Q: Can I fix the "plastic" look?

A: Try reducing the face_enhancer_blend (if available) to ~50-70%. This allows some of the original video's noise and texture to bleed through, reducing the "perfect skin" effect.
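
As a rough sketch of what a blend setting like this does, assume it is a simple linear mix between the unenhanced and enhanced face (an assumption for illustration; check your tool's own behavior). The function name `blend_enhanced` and the synthetic arrays are hypothetical.

```python
import numpy as np

def blend_enhanced(original: np.ndarray, enhanced: np.ndarray,
                   blend_percent: float) -> np.ndarray:
    """Linear mix: 100 keeps only the enhanced face, 0 keeps only the original."""
    w = float(np.clip(blend_percent, 0.0, 100.0)) / 100.0
    return w * enhanced + (1.0 - w) * original

rng = np.random.default_rng(0)

# Unenhanced face region: carries noise and texture from the pipeline so far.
original = 128.0 + rng.normal(0.0, 8.0, size=(64, 64))
# Enhanced face region: modelled here as perfectly smooth skin.
enhanced = np.full((64, 64), 128.0)

for pct in (100, 60):
    out = blend_enhanced(original, enhanced, pct)
    # Fraction of the original texture (std) that survives the blend.
    print(pct, round(float(out.std() / original.std()), 2))
```

In this toy setup a 60% blend keeps 40% of the original texture amplitude, while 100% keeps none.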

Q: Why does the face look gray/washed out?

A: The color transfer step (often a Reinhard-style statistics match) failed to match the target's skin tone range. If your tool exposes a color normalization or color transfer setting, switching the method can sometimes help.

Q: Is there a model with better lighting?

A: simswap often handles lighting better than inswapper_128, but it has lower identity resemblance. It's a trade-off between "looking like the person" and "looking like it belongs in the scene."

Q: Can I add film grain to the swap?

A: Yes, adding noise/grain in post-production (video editing) is the single best way to make an AI swap look natural. It unifies the texture of the fake face and the real background.
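
A minimal sketch of the grain idea, assuming frames are H x W x 3 `uint8` arrays. Video editors offer far more realistic grain models; `add_grain` and its `strength` parameter are hypothetical names, and Gaussian noise is only a simple stand-in for film grain.

```python
import numpy as np

def add_grain(frame: np.ndarray, strength: float = 6.0, seed=None) -> np.ndarray:
    """Add monochrome Gaussian grain to an H x W x 3 uint8 frame."""
    rng = np.random.default_rng(seed)
    # One noise value per pixel, shared by all channels, so the grain
    # changes brightness rather than color.
    noise = rng.normal(0.0, strength, size=frame.shape[:2] + (1,))
    noisy = frame.astype(np.float64) + noise
    return np.rint(np.clip(noisy, 0.0, 255.0)).astype(np.uint8)

# Stand-in for a perfectly smooth swapped face region.
smooth_face = np.full((128, 128, 3), 128, dtype=np.uint8)
grained = add_grain(smooth_face, strength=6.0, seed=0)

print(smooth_face.std())  # 0.0: no texture at all
print(grained.std())      # roughly the grain strength
```

Applying the same grain over the whole composited frame, face and background alike, is what brings their textures closer together.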

Related phenomena

- Why AI Face Swaps Can Look Blurry or Soft – The underlying resolution issue.

- Face Alignment Errors Explained – When misalignment causes the "pasted on" look.

- Why Video Face Swaps Flicker and Drift – Temporal instability contributing to the fake look.

Final perspective

Achieving a "natural" look is rarely about finding the perfect AI model. It's mostly about compositing. Real visual effects artists don't just swap and save; they color grade, add grain, and manually mask edges. One-shot swappers get you 80% of the way there; the final 20% of realism comes from traditional video editing techniques.